Combining voxel and normal predictions for multi-view 3D sketching

Jan 1, 2019

Johanna Delanoy

David Coeurjolly

Jacques-Olivier Lachaud

Adrien Bousseau

Abstract

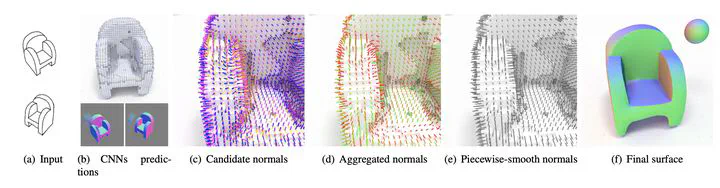

Recent works on data-driven sketch-based modeling use either voxel grids or normal/depth maps as geometric representations compatible with convolutional neural networks. While voxel grids can represent complete objects – including parts not visible in the sketches – their memory consumption restricts them to low-resolution predictions. In contrast, a single normal or depth map can capture fine details, but multiple maps from different viewpoints need to be predicted and fused to produce a closed surface. We propose to combine these two representations to address their respective shortcomings in the context of a multi-view sketch-based modeling system. Our method predicts a voxel grid common to all the input sketches, along with one normal map per sketch. We then use the voxel grid as a support for normal map fusion by optimizing its extracted surface such that it is consistent with the re-projected normals, while being as piecewise-smooth as possible overall. We compare our method with a recent voxel prediction system, demonstrating improved recovery of sharp features over a variety of man-made objects.
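The fusion step described above (a surface extracted from the voxel grid, normals re-projected from several views, a smoothness prior) can be illustrated with a much-simplified least-squares sketch. It is not the authors' implementation. It assumes that the surface is already given as N elements with an adjacency list, that each view's normal map has already been re-projected onto those elements with a per-view visibility weight, and that a plain quadratic smoothness term stands in for the piecewise-smooth regularization of the paper. The function `fuse_normals` and its parameters `observed`, `weights`, `edges` and `lam` are hypothetical names chosen for illustration.

```python
import numpy as np


def fuse_normals(observed, weights, edges, lam=1.0):
    """Fuse per-view normal observations onto N surface elements (illustrative sketch).

    observed: (V, N, 3) normals re-projected from V views onto the elements
    weights:  (V, N) confidence of each observation (0 where the element is hidden)
    edges:    iterable of (i, j) index pairs of adjacent elements
    lam:      weight of the quadratic smoothness term (a stand-in, not the paper's energy)

    Minimizes  sum_{v,i} w_vi |n_i - o_vi|^2 + lam * sum_{(i,j)} |n_i - n_j|^2
    in closed form, then rescales every fused vector to unit length
    (an approximation of a hard unit-norm constraint).
    Each connected group of elements needs at least one nonzero weight,
    otherwise the linear system is singular.
    """
    # Data term: diagonal of summed weights, right-hand side of weighted normals.
    A = np.diag(weights.sum(axis=0)).astype(float)
    b = np.einsum("vn,vnk->nk", weights, observed)
    # Smoothness term: add lam times the graph Laplacian of the adjacency.
    for i, j in edges:
        A[i, i] += lam
        A[j, j] += lam
        A[i, j] -= lam
        A[j, i] -= lam
    # The x, y, z coordinates share the same system matrix, so solve them together.
    n = np.linalg.solve(A, b)
    return n / np.linalg.norm(n, axis=1, keepdims=True)


if __name__ == "__main__":
    # Two adjacent elements; only element 0 is visible, and only in a single view.
    observed = np.array([[[0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]])
    weights = np.array([[1.0, 0.0]])
    # The hidden element 1 receives its normal through the smoothness term.
    print(fuse_normals(observed, weights, edges=[(0, 1)], lam=1.0))
```

In this toy setting the quadratic term is what propagates information into elements no view sees, but it also rounds off creases between faces. That trade-off is the reason an edge-preserving, piecewise-smooth energy, as stated in the abstract, is better suited to man-made objects with sharp features.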

Type

Publication

Computers & Graphics, 82: 65-72, 2019

Deep Learning

Multi-View Reconstruction

Discrete Geometric Estimator

3D

Digital Geometry Application
