Professor of Computer Science

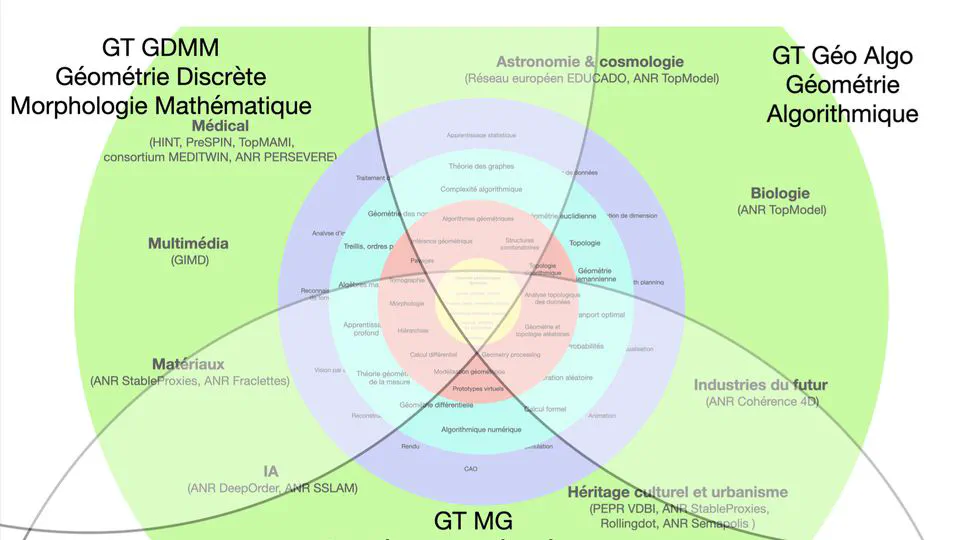

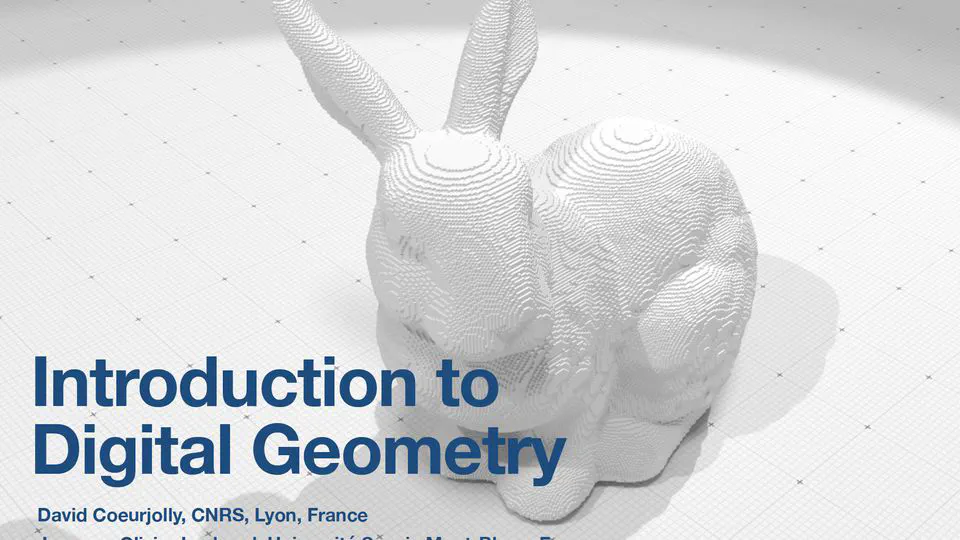

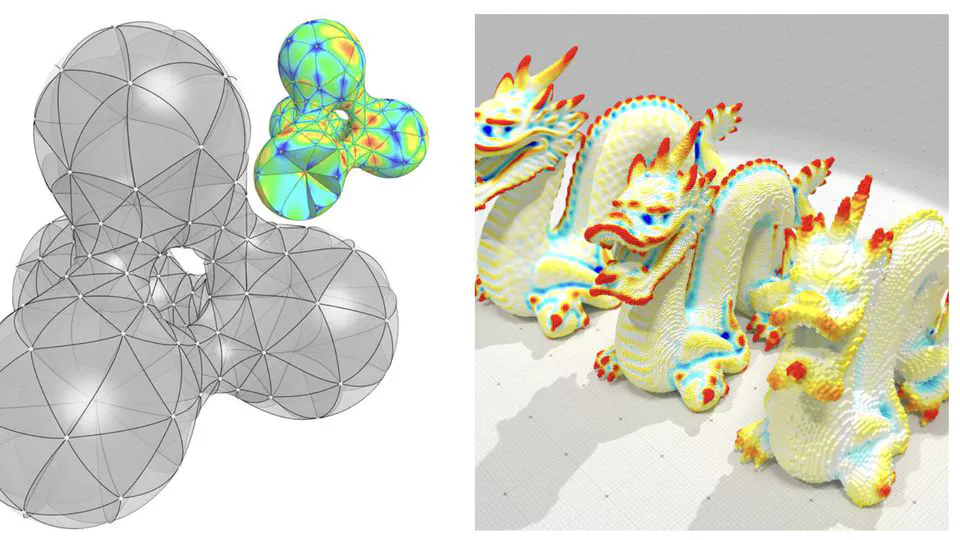

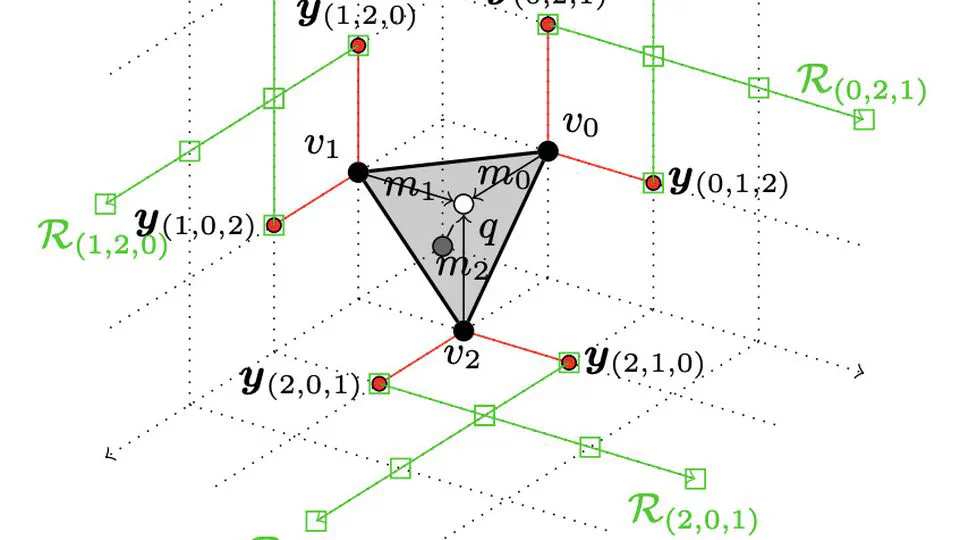

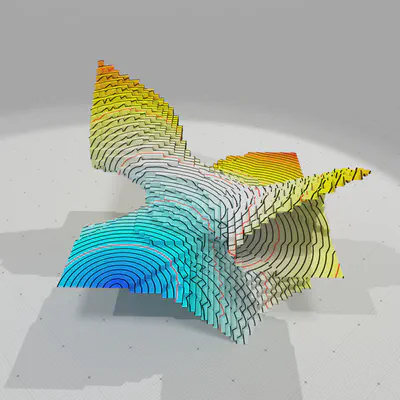

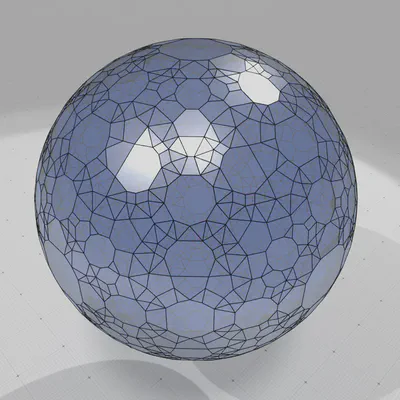

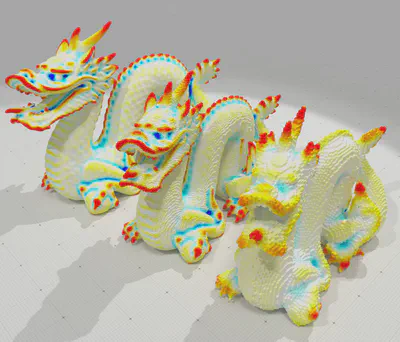

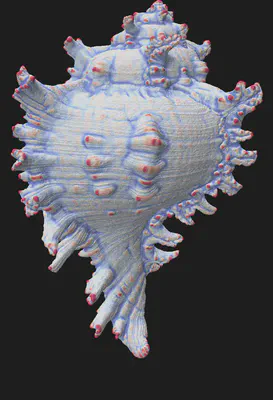

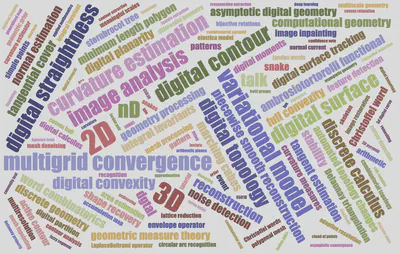

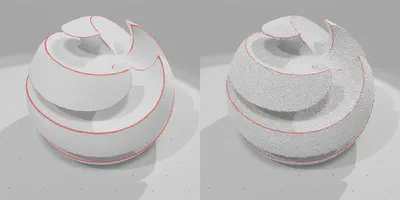

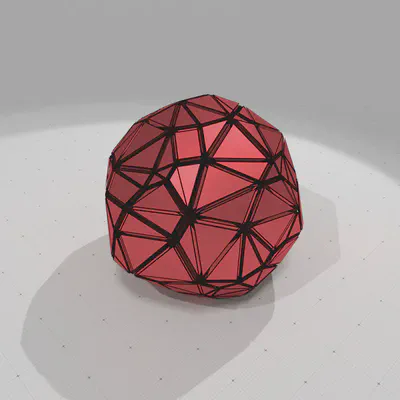

I am Professor of Computer Science at University Savoie Mont Blanc. I conduct my research at the Laboratory of Mathematics and teach in the Computer Science Department of the UFR Science and Montagne. In 2023-2024, I co-chaired the “Geometry” thematic year of the GdR IFM and IGRV. I was head of the Cursus Master en Ingénierie Informatique until August 2024. My research interests include digital geometry and topology, image segmentation and analysis, geometry processing, variational models, and discrete calculus. Although not an expert, I am also fond of arithmetic, word combinatorics, computational geometry, combinatorial topology, and differential geometry, and I often use results from these fields in my research.

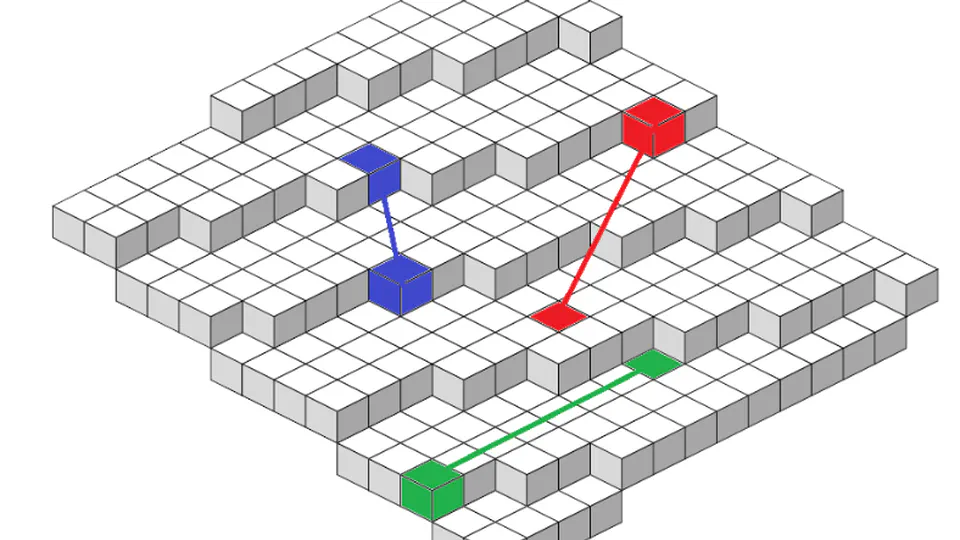

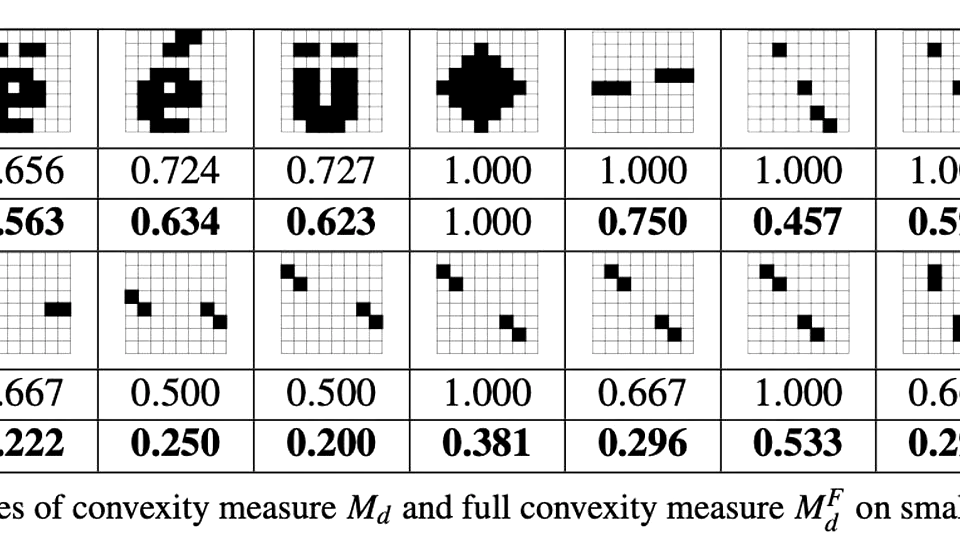

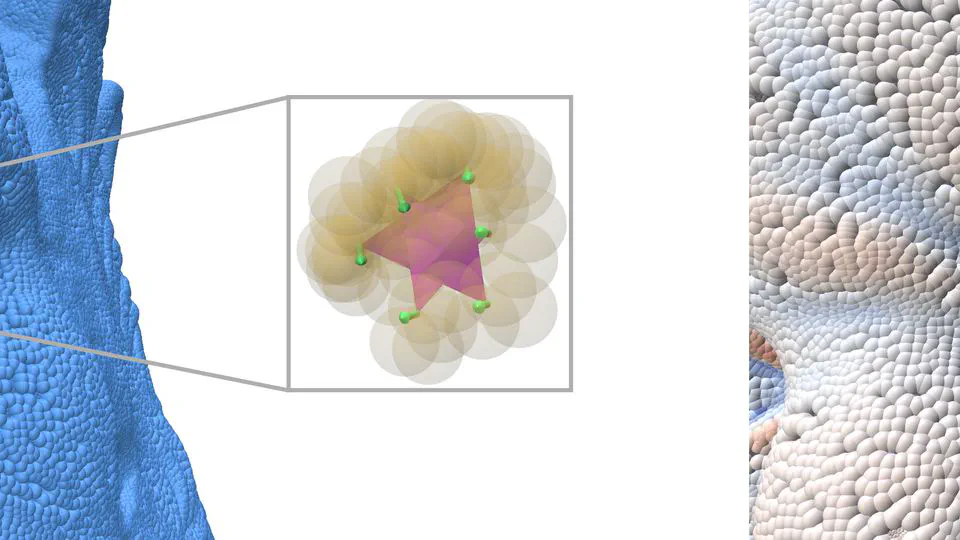

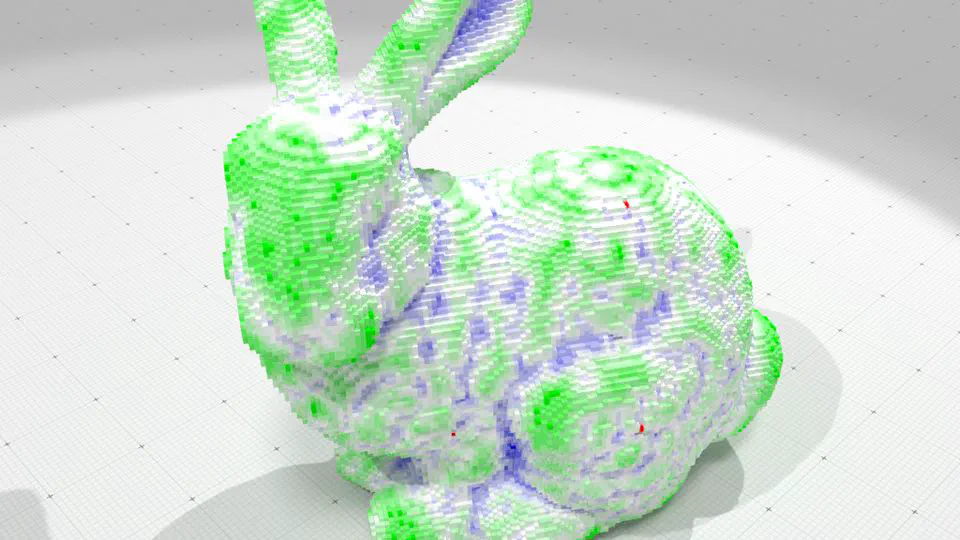

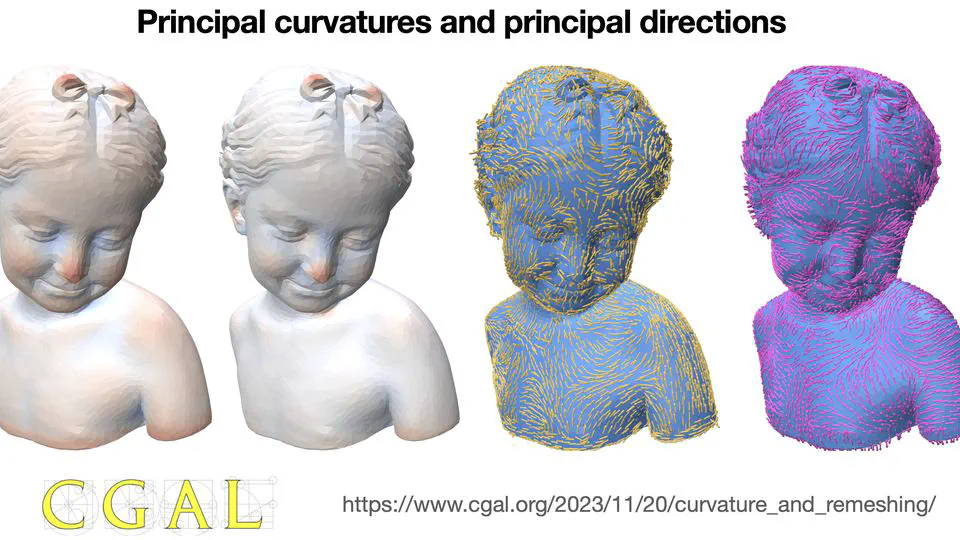

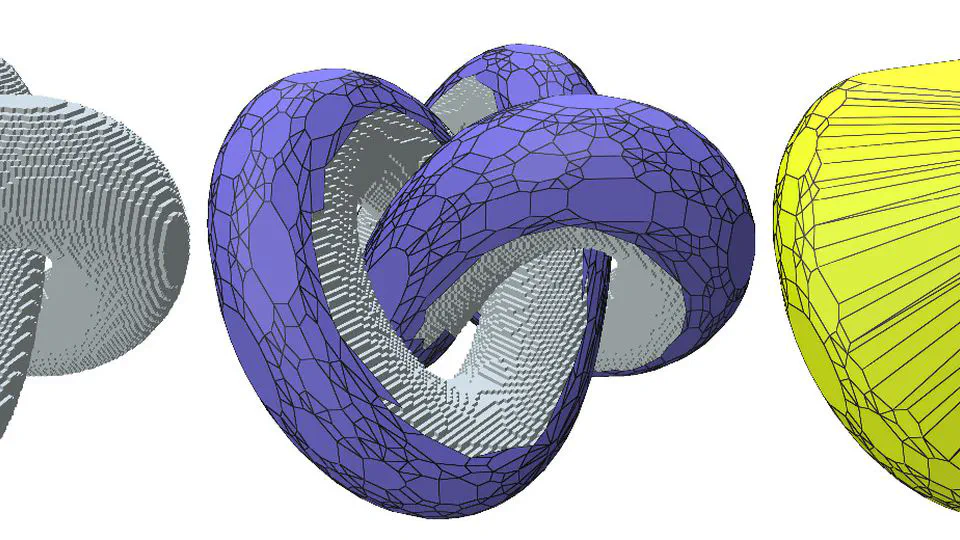

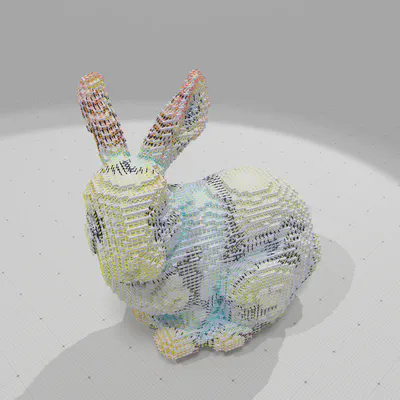

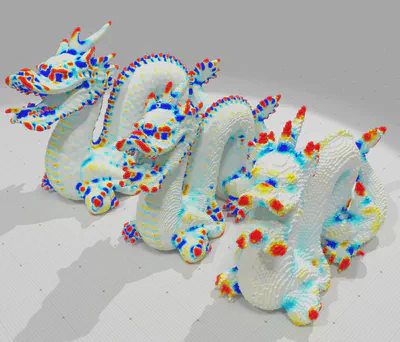

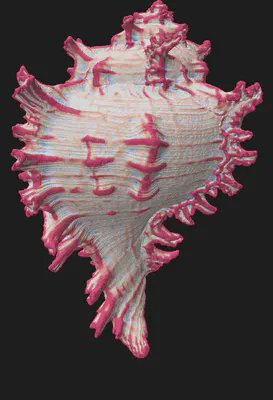

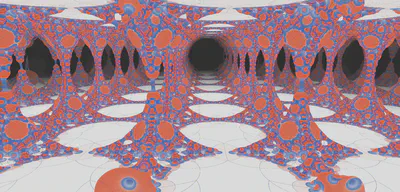

- Digital Geometry

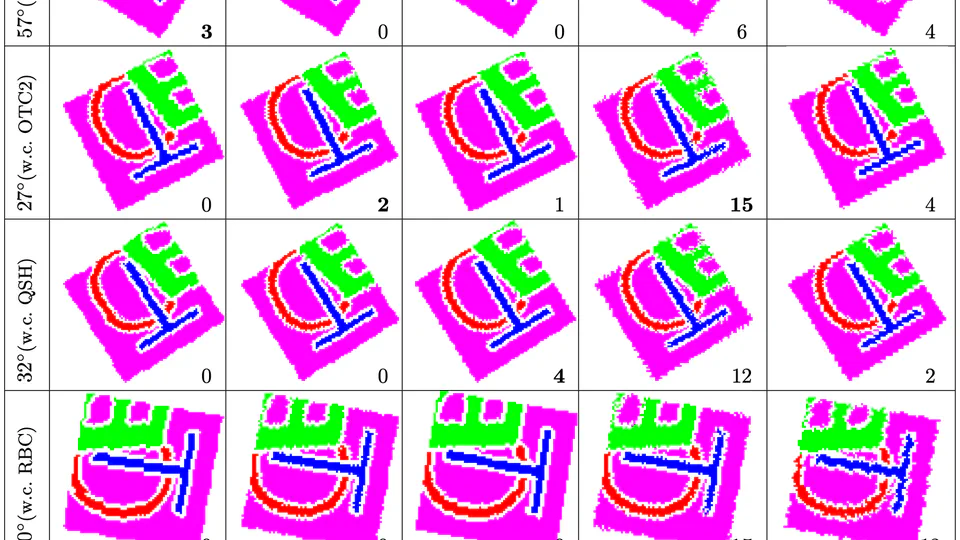

- Image analysis

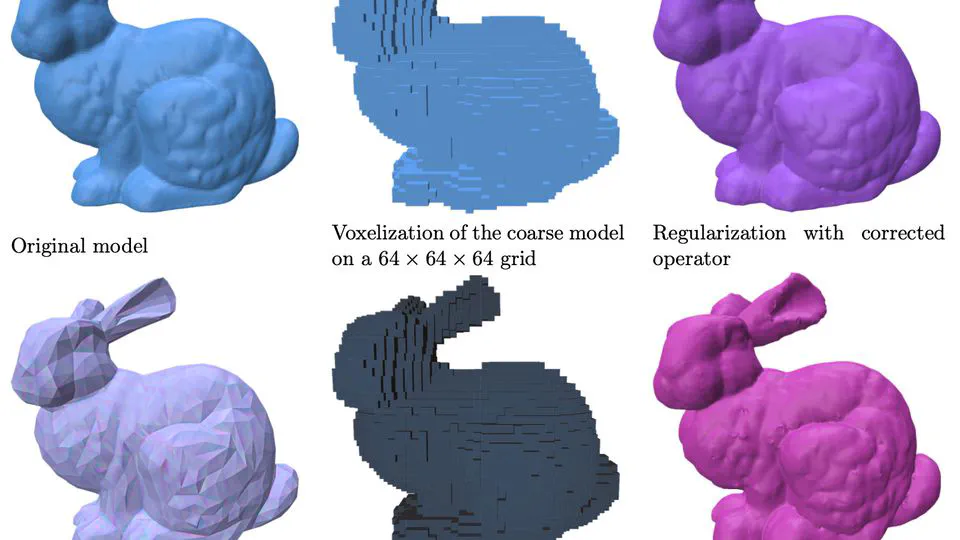

- Geometry processing

- Variational models

- Discrete calculus

HDR in Computer Science (Habilitation à Diriger des Recherches), 2006

University Bordeaux 1

PhD in Computer Science, 1998

University Joseph Fourier

MSc in Computer Science, 1994

University Joseph Fourier

Engineer in Applied Mathematics and Computer Science, 1994

ENSIMAG School of Engineering

Corrected curvature measures

"Geometry" Year 2023-2024: Review and Outlook (Année "Géométrie" 2023-2024 : bilan et prospective)

Cross Border Meeting on Discrete and Computational Geometry and Applications


jacques-olivier.lachaud_@_univ-smb.fr

+33 4 79 75 86 42

Université Savoie Mont Blanc, Laboratoire de Mathématiques, campus scientifique.

Le Bourget-du-Lac, F-73376, France

https://maps.app.goo.gl/pzsdHsp64kGQzwyy8

Enter the “Le Chablais” building (number 21) and take the stairs to Office 104 on Floor 1.